PyJordan - The Docker system to run remote ML trainings.

A Dockerfile and Python binary system to execute some (complex) code :)

PyJordan was a new internal feature and the first major improvement to the machine learning platform. Read on.

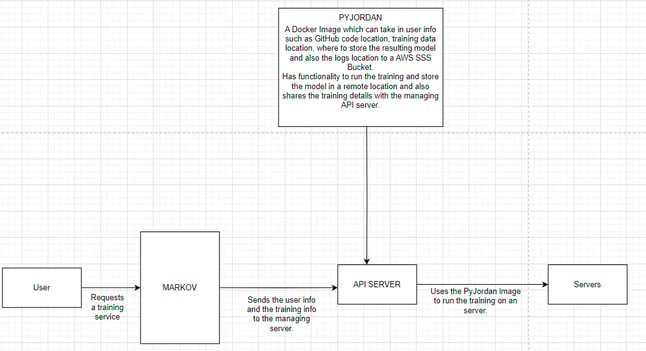

In the early days of the platform, a user who wanted to run training code first had to choose a flavor (a Docker image containing the required libraries, such as NumPy and pandas). As you can guess, this meant managing a lot of images. A single release would see around 20 to 30 individual images that had to be rebuilt.

Before long, this became the top issue that needed solving. I was part of a team of 3 developers who initiated the effort to replace those images with something reusable: something that downloads code and libraries on the fly, does not need the user to know the inner workings of the platform, and can incorporate new features just by adding code in one place. I think robust code reusability should matter to every developer, as so many features now rely on the robust, heavily integration-tested and unit-tested foundations of PyJordan.

This was done by boiling down all these separate Docker images, which contained mostly similar libraries, into one cohesive Docker image. That image was the focus of development. It fundamentally does the following: starts a Django server, downloads all of the user's training code, downloads all the libraries required by that code, downloads all the training data from a remote location (for example an AWS S3 bucket or Hive), runs the training code, and uploads the resulting ML model to another remote location configured by the user.
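To make the sequence above concrete, here is a minimal sketch of how such a run could be organised as an ordered list of steps. It is not the actual PyJordan code: `TrainingJob`, its fields, the step functions and `run_job` are hypothetical names, the Django server start-up is left out, and each step only records what it would do instead of touching the network.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class TrainingJob:
    """Hypothetical request payload; the field names are assumptions."""
    code_url: str
    data_url: str
    model_upload_url: str
    log: List[str] = field(default_factory=list)


Step = Callable[[TrainingJob], None]


# Each step is a stand-in that records its intent rather than doing real I/O.
def download_code(job: TrainingJob) -> None:
    job.log.append(f"download code from {job.code_url}")


def install_requirements(job: TrainingJob) -> None:
    job.log.append("install libraries required by the code")


def download_data(job: TrainingJob) -> None:
    job.log.append(f"download data from {job.data_url}")


def run_training(job: TrainingJob) -> None:
    job.log.append("run training code")


def upload_model(job: TrainingJob) -> None:
    job.log.append(f"upload model to {job.model_upload_url}")


# The whole run is defined in one place, in execution order.
PIPELINE: List[Step] = [
    download_code,
    install_requirements,
    download_data,
    run_training,
    upload_model,
]


def run_job(job: TrainingJob, steps: List[Step] = PIPELINE) -> List[str]:
    """Run every step in order and return the recorded actions."""
    for step in steps:
        step(job)
    return job.log
```

With a layout like this, the user only supplies the locations in `TrainingJob`, and the image decides everything else.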

This solved a lot of problems. The user no longer needs to care about which "flavor" to use and can simply submit a request with only the minimum amount of information they should care about. The image dynamically starts a Django server, executes all the code programmatically, and uploads the model to the location the user chose.

When a feature request came in to upload the log file to a remote location, it was done very quickly. The dynamic nature of PyJordan allowed this feature to be deployed with only a couple of lines of code. A later feature was incorporated just as quickly: tracking and sending model metrics such as training time and error rate as the training went on. This feature fundamentally changed how the platform worked and how later features were developed.
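As a rough illustration of sending metrics while training is still running, the sketch below reports one record per epoch through a callback. Everything here is an assumption made for the example: `train_with_metrics`, the `report` callback and the record keys are hypothetical, and the list of error rates stands in for a real training loop.

```python
import time
from typing import Callable, Dict, Iterable

# A sink receives one metrics record at a time, e.g. to forward it to a server.
MetricSink = Callable[[Dict[str, float]], None]


def train_with_metrics(
    error_rates: Iterable[float],
    report: MetricSink,
    clock: Callable[[], float] = time.monotonic,
) -> None:
    """Report elapsed time and error rate after every (simulated) epoch."""
    start = clock()
    for epoch, error_rate in enumerate(error_rates, start=1):
        # A real job would train for one epoch here before reporting.
        report(
            {
                "epoch": epoch,
                "error_rate": error_rate,
                "elapsed_seconds": clock() - start,
            }
        )


# Usage: collect the records in a list instead of sending them anywhere.
sent = []
train_with_metrics([0.4, 0.25, 0.1], sent.append)
```

Because the sink is just a callable, the same loop could append to a list in tests and post to a remote endpoint in production.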

Time taken from whiteboarding to production: 5-6 sprints.

Tech stack used: Python, Docker, Django, Python Shiv.

Thank you for reading till the end, here is a cookie :)